#33 Why You Should Read More Engineering Blogs

One of the early mistakes I made in my career was not reading engineering blogs. I used to think that the only way for an engineer to deepen their knowledge was by reading textbooks like Designing Data-Intensive Applications by Martin Kleppmann, Fundamentals of Software Architecture, and so on. While these are great references to have, they often miss the real-world challenges engineers face when architecting solutions for their customers, especially at scale. A great way to learn new patterns or solutions is through stories. That’s where engineering blogs can help.

In this post, I’ll highlight some example stories and share my 25 favourite engineering blogs.

Why should I read engineering blogs?

Say you’re on product team (A), focused on developing a component like a search service. There’s team (B) working on a payment service, and team (C) working on the product’s infrastructure. If one engineer from each team gave a ~30-minute talk to share new findings or solutions they’ve tried, you’d want to attend, right?

Now imagine that, instead of 30-minute talks, their findings and solutions are shared as written documents. And instead of just internal teams sharing their work, these documents come from teams across various companies. The range of stories is now virtually endless, with something interesting and relevant for every engineering team.

A good engineering story includes:

Challenge/Struggle

Ideas

Solution

Next Steps

Academic textbooks often take time to include new industry-tested ideas. So, to avoid “knowledge staleness”, it’s important to combine work experience with modern solutions to build a strong understanding of what works and what doesn’t. Solutions aren’t always as straightforward as some textbooks may suggest.

So my advice for software engineers is this:

Deepen your knowledge through work experience and by reading books and engineering blogs.

Here are 8 blog posts where engineers share how they solved an interesting problem, including what worked and what didn’t.

Engineering blog stories

1. Netflix - Introducing Impressions (2025)

Netflix wrote a post about building a system to track every image a user hovers over on the homepage, called “impressions”, to improve personalised recommendations. They explained why tracking impression history matters (to avoid overexposure, highlight new releases, and improve analytics).

They shared interesting stats about the new system, which processes 1 to 1.5 million events per second using technologies like Apache Flink, Kafka, and Iceberg. They also covered how they ensure high data quality, along with their future plans to automate performance tuning and improve alerting.

Link: https://netflixtechblog.com/introducing-impressions-at-netflix-e2b67c88c9fb

2. Canva - Supporting real-time mouse pointers (2024)

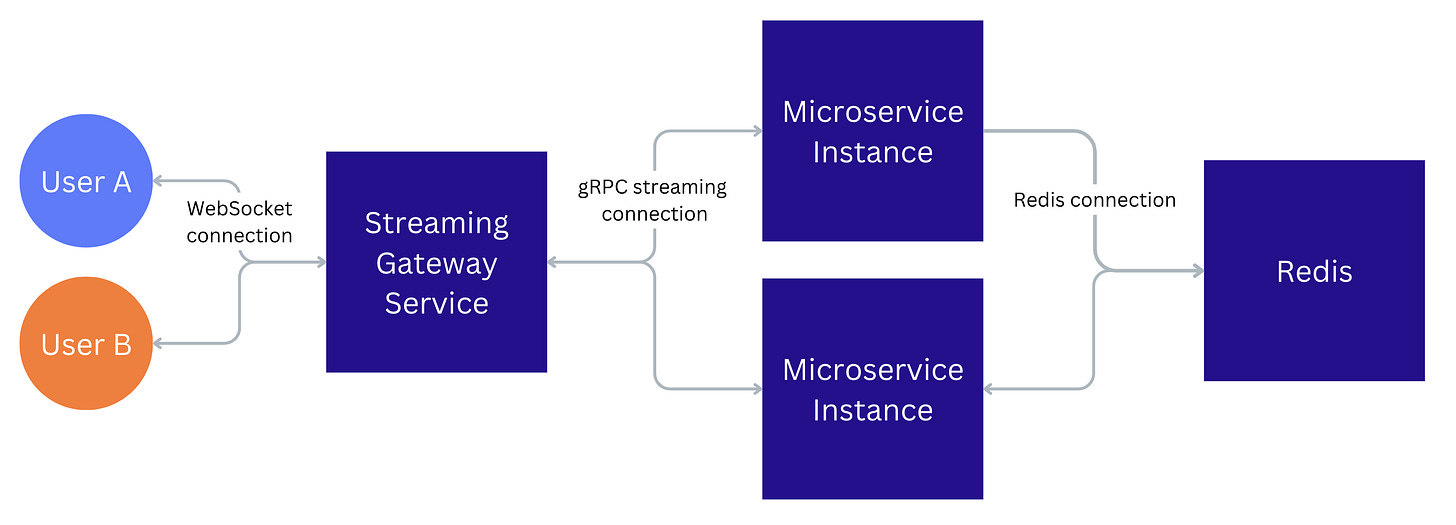

Say you are asked to design a collaborative editing experience (like Canva) based on customer research. You discuss product requirements and trade-offs, and after some thinking, you might arrive at a solution that requires WebSockets for bidirectional communication with a coordination component (e.g., etcd or ZooKeeper), or a pub/sub model (e.g., Redis).

That’s what Canva initially did. They served 100,000 users using WebSockets and Redis pub/sub for real-time interactions.

However, Canva faced a scalability issue with supporting real-time mouse updates, which were capped at 3 updates / sec. They shared in their blog post how they transitioned from a WebSockets + Redis-based architecture (Milestone 1) to a WebRTC-based architecture (Milestone 2) to achieve a much higher update rate of 60 updates / sec.
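To get a feel for what those two rates mean, here is a minimal Python sketch of a client-side throttle. It is not taken from Canva's post: `UpdateThrottle` and `count_sent` are hypothetical names, and the only numbers borrowed from the story are the two update rates. At 3 updates / sec a pointer position can be about 333 ms stale, while at 60 updates / sec the gap is about 16.7 ms.

```python
from typing import Iterable, Optional


class UpdateThrottle:
    """Lets an update through only if enough time has passed since the last one."""

    def __init__(self, max_per_second: float) -> None:
        self.min_interval = 1.0 / max_per_second  # seconds between sent updates
        self._last_sent: Optional[float] = None

    def should_send(self, now: float) -> bool:
        # The first update always goes out; later ones must wait min_interval.
        if self._last_sent is None or now - self._last_sent >= self.min_interval:
            self._last_sent = now
            return True
        return False


def count_sent(max_per_second: float, event_times: Iterable[float]) -> int:
    """Count how many pointer events (timestamps in seconds) survive the throttle."""
    throttle = UpdateThrottle(max_per_second)
    return sum(1 for t in event_times if throttle.should_send(t))


# Simulate one second of mouse movement sampled 60 times per second.
mouse_events = [i / 60 for i in range(60)]
for rate in (3, 60):
    interval_ms = 1000 / rate
    sent = count_sent(rate, mouse_events)
    print(f"{rate} updates/sec -> one update every {interval_ms:.1f} ms, {sent} sent")
```

The throttle only controls how often a client sends. The harder part of Canva's story is the transport underneath, which is why the post is worth reading in full.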

Their unique infrastructure problem is a great example of how production engineers manage stateful connections at scale while carefully weighing the trade-offs between technologies that power a great product like Canva.

Link: https://www.canva.dev/blog/engineering/realtime-mouse-pointers

3. Figma - A 9-month journey of sharding PostgreSQL for “infinite” scale (2024)

Figma wrote a blog post about their journey to horizontally shard their PostgreSQL database. They shared useful insights on database sharding (logical vs physical sharding), along with some interesting stats. For example, new tables sharded in production experienced only 10 seconds of partial downtime. It’s an interesting read for those managing databases at scale.
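The logical vs physical distinction is easier to see in code. The sketch below is a generic illustration, not Figma's implementation: the shard count, the database names (`db-0`, `db-1`), and every function name are assumptions made up for this example. The idea is that a row's logical shard is derived from its shard key and never changes, while a separate map decides which physical database currently hosts each logical shard.

```python
import hashlib
from typing import Dict

NUM_LOGICAL_SHARDS = 16  # hypothetical; fixed once and never changed


def logical_shard(shard_key: str) -> int:
    """Map a shard key to a stable logical shard number."""
    digest = hashlib.sha256(shard_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % NUM_LOGICAL_SHARDS


# Start with every logical shard hosted on a single physical database.
physical_map: Dict[int, str] = {s: "db-0" for s in range(NUM_LOGICAL_SHARDS)}


def physical_database(shard_key: str, mapping: Dict[int, str]) -> str:
    """Look up which physical database currently hosts this key."""
    return mapping[logical_shard(shard_key)]


def split_upper_half(mapping: Dict[int, str], new_db: str) -> Dict[int, str]:
    """Return a new map with the upper half of the logical shards moved to new_db."""
    half = NUM_LOGICAL_SHARDS // 2
    return {s: (new_db if s >= half else db) for s, db in mapping.items()}


key = "file-123"  # hypothetical shard key
print(logical_shard(key), physical_database(key, physical_map))

# A physical split changes only the map; the key's logical shard is unchanged.
after_split = split_upper_half(physical_map, "db-1")
print(logical_shard(key), physical_database(key, after_split))
```

Because the application only ever reasons about logical shards, the physical layout can change later without touching how keys are assigned.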

Link: https://www.figma.com/blog/how-figmas-databases-team-lived-to-tell-the-scale

4. Discord - how they store trillions of messages (2023)

Discord shared in their 2017 blog post how they were managing 120 million messages / day using Cassandra with a team of only four backend engineers! However, they later faced performance issues with Cassandra as their messaging infrastructure grew to store trillions of messages. As a result, they decided to migrate to ScyllaDB for handling the new write-throughput. They go into technical details about how they managed the database migration and celebrated how ScyllaDB helped them handle massive data during high-traffic events like the World Cup. Here’s a highlight:

“At the end of 2022, people all over the world tuned in to watch the World Cup. One thing we discovered very quickly was that goals scored showed up in our monitoring graphs. This was very cool because not only is it neat to see real-world events show up in your systems, but this gave our team an excuse to watch soccer during meetings. We weren’t “watching soccer during meetings”, we were “proactively monitoring our systems’ performance.””

Links:

(2017) https://discord.com/blog/how-discord-stores-billions-of-messages

(2023) https://discord.com/blog/how-discord-stores-trillions-of-messages

5. Snap - using QUIC for Snapchatters (2021)

The QUIC protocol was designed at Google in 2012 as a UDP-based alternative to TCP that reduces network latency. I had read about this protocol before, but I had never read stories about using QUIC in production. Snap shared their story of how QUIC reduced network latency for live videos, a critical feature for their users (Snapchatters).

Link: https://eng.snap.com/quic-at-snap

6. Shopify - Building resilient payment systems

Shopify shared 10 tips for building resilient payment systems in a blog post. While the details are high-level, their sixth tip on idempotency keys stood out as an interesting observation. They recommend using ULID instead of UUIDv4 because ULIDs are time-ordered, so new keys land next to each other in a B-tree index instead of being scattered across it. Here’s a highlight:

“In one high-throughput system at Shopify we’ve seen a 50 percent decrease in INSERT statement duration by switching from UUIDv4 to ULID for idempotency keys.”
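To see the "sorted nature" in practice, here is a minimal Python sketch of a ULID-style generator. It follows the published ULID layout (a 48-bit millisecond timestamp followed by 80 random bits, encoded as 26 Crockford base32 characters), but `ulid_like` is a hypothetical helper written for this post, not Shopify's code and not a full ULID implementation (for example, it does not guarantee ordering within the same millisecond).

```python
import secrets
import time
import uuid
from typing import Optional

CROCKFORD_BASE32 = "0123456789ABCDEFGHJKMNPQRSTVWXYZ"


def ulid_like(timestamp_ms: Optional[int] = None) -> str:
    """Return a 26-character ID: 48-bit timestamp (ms) + 80 random bits."""
    if timestamp_ms is None:
        timestamp_ms = time.time_ns() // 1_000_000
    value = (timestamp_ms << 80) | secrets.randbits(80)
    chars = []
    for _ in range(26):  # 26 characters x 5 bits covers the 128-bit value
        chars.append(CROCKFORD_BASE32[value & 0x1F])
        value >>= 5
    return "".join(reversed(chars))


# IDs created at later timestamps always sort after earlier ones,
# because the timestamp occupies the most significant bits.
ordered = [ulid_like(ts) for ts in (1_000, 2_000, 3_000)]
print(ordered == sorted(ordered))

# UUIDv4 values are random, so creation order and sort order are unrelated.
random_ids = [str(uuid.uuid4()) for _ in range(3)]
print(random_ids)
```

With time-ordered keys, consecutive inserts touch the same end of the index, which is the kind of behaviour the INSERT improvement in the quote points to.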

Link: https://shopify.engineering/building-resilient-payment-systems

7. Uber - Kubernetes migration journey (2025)

Uber initially used Apache Mesos as their container orchestration framework to manage their platform. However, they decided to move away from Mesos (which they announced as deprecated in 2021) and migrate to Kubernetes. This decision posed significant challenges related to scale, reliability, and integration. They first defined their migration principles and explained why they had to rebuild all their integrations from scratch. It’s an interesting read about Uber’s year-and-a-half migration journey from Mesos to Kubernetes.

Link: https://www.uber.com/blog/migrating-ubers-compute-platform-to-kubernetes-a-technical-journey/

8. Stripe - Building interactive docs with Markdoc

Stripe shared how they rebuilt their documentation with Markdoc, a custom format that simplifies the authoring experience for developers. It’s an interesting tool that boosts both developer productivity and documentation quality. If you care about great docs, this one’s a must-read.

Links:

Top 25 engineering blogs

P.S. I don’t recommend reading all of these blogs. Instead, pick the ones that tackle a problem similar to one you’re facing or cover a topic you’re curious about.

Here are my favourites:

Let me know your favourite blogs or share your recommendations!

